Over the past decade, the number of citizen science projects has increased rapidly. Citizen science is now an accepted term for a range of practices that involve members of the general public, many of whom are not trained as scientists, in collecting, categorizing, transcribing, or analyzing scientific data (Bonney et al., 2014). Successful citizen science projects have involved participants in classifying astronomical images, reporting bird sightings, counting invasive species in the field, and using spatial reasoning skills to align genomes or fold proteins. These projects feature tasks that can only be performed by people (e.g., making an observation) or that people can perform more accurately (e.g., classifying an image), with computational means supporting the organization of these efforts.

As such, citizen science often relies on some form of socio-computational system (Prestopnik & Crowston, 2012). Games are one form of socio-computational system used in citizen science to entice and sustain participation. The list of citizen science games that people can play while contributing to science is growing. Games, and gamified websites or applications, take a range of forms within citizen science. Some projects, like MalariaSpot, include just a few game elements such as points, badges, and leaderboards. Other projects, like Foldit and Eyewire, are fully immersive experiences. Still others, such as Forgotten Island, are beginning to use narrative-based gamification approaches.

Depending on the complexity of the task they feature, citizen science games can be classified into microtasks and megatasks (Good & Su, 2013). Microtasks can be accomplished quickly and easily by anyone who can read some simple instructions, while megatasks concern challenging problems whose solution might take weeks or even months of effort from qualified experts. The goal of involving the general public in megatasks is to have a large pool of candidates from which to identify a few talented individuals whose skills can solve challenging problems. Good and Su identified hard games, which give players access to small numbers of extremely challenging individual problems, as one approach to megatasks.

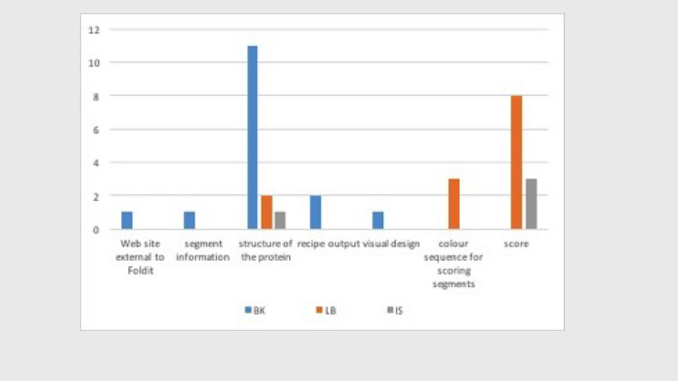

A very successful example of a hard game is Foldit, which invites talented players to predict three-dimensional (3D) models of proteins from their amino acid composition. Scientists analyze players’ highest-scoring solutions and determine whether there is a native structural configuration that can be applied to relevant proteins in the real world to help eradicate diseases and create biological innovations. As skilled and talented as they can be, Foldit players would struggle to solve those complex puzzles without the support of machines. Foldit is a good example of human computation (von Ahn, 2005), since it was developed to find places where computational power is most useful and where human abilities are best applied (Cooper, 2014). Soon after the release of Foldit, players themselves strengthened human computation by requesting the addition of automation in the form of recipes, small scripts of computer code that automate some protein folding processes, so that they could carry out their strategies more easily when solving puzzles. Over the years, the increasing use of recipes has created resentment among several players, who think that recipes have taken over too much of the game and lead novices to believe that running recipes is all they need to do to play.

However, previous findings (Ponti & Stankovic, 2015) suggest that the use of recipes allows skilled Foldit players to strengthen their role as experts rather than becoming appendages of automated gameplay. Skills are part of players’ “professional vision” (Goodwin, 1994). The main purpose of this study is to investigate players’ professional vision and interpret their use of recipes during gameplay. The main research question is: What do players observe and do when they use recipes in their gameplay? To address this question, we examined the choices made by players solving two different kinds of puzzles, a beginner’s puzzle and an advanced one. Specifically, we studied when, how, and why the players ran recipes when solving the puzzles, and what actions those recipes performed in the gameplay.

The overall goal is to contribute to the knowledge of the relationship between technology and skills in performing megatasks and to draw implications for the design of citizen science games based on human computation.

Related Work

To place this study in context, we thus present an overview.

Authors:

Marisa Ponti, Igor Stankovic, Wolmet Barendregt (Department of Applied Information Technology, University of Gothenburg)

Bruno Kestemont, Lyn Bain (FolditPlayers, Go Science Team)

Read the preprint on SocArXiv:

https://osf.io/preprints/socarxiv/yqw6g/